AI Rat Image With Enormous And Embarrassing Flaw Makes It Past Scientists: Is AI Writing Academic Papers?

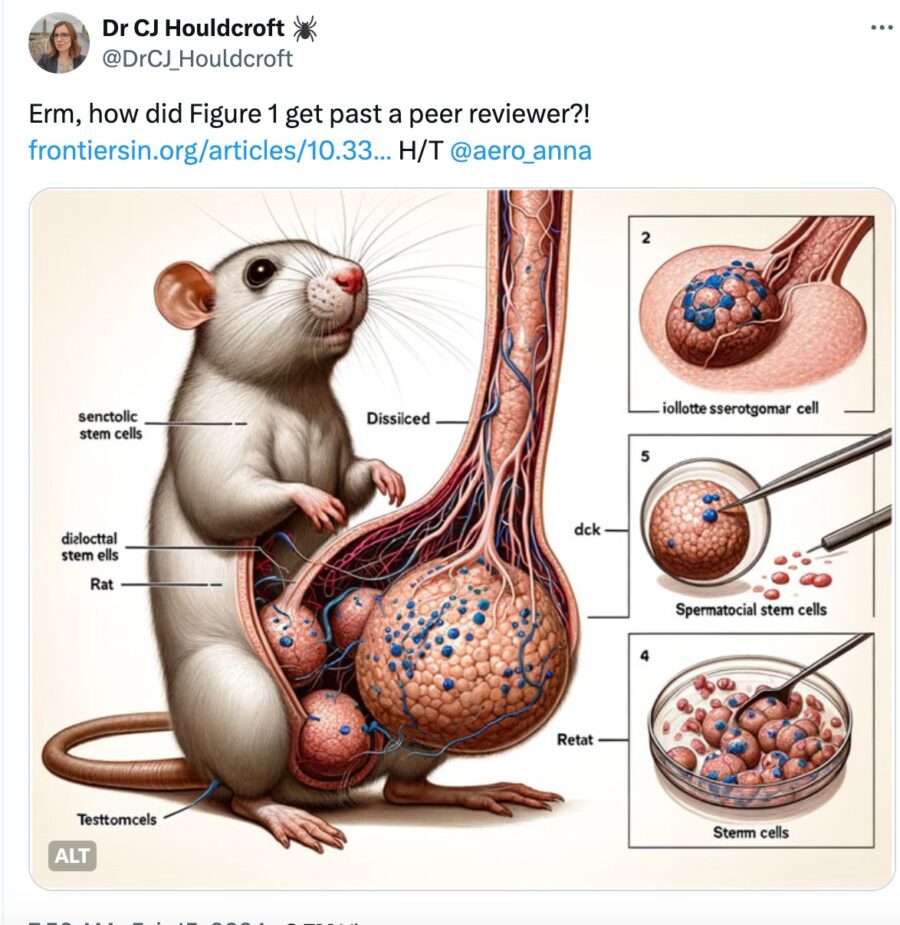

The scientific journal Frontiers in Cell and Developmental Biology recently came under scrutiny for a paper titled “Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway.” The paper not only reported findings on sperm stem cells but also included an astonishingly inaccurate AI-generated illustration of a male rat’s reproductive organs.

Midjourney Has Weird Ideas About Rats

The paper featured three supposedly illustrative images, all generated by Midjourney. The most glaring error was found in the first AI-generated figure, intended to depict “spermatogonial stem cells, isolated, purified and cultured from rat testes.” However, what the image portrayed was a white rat with an anatomically exaggerated and wildly incorrect reproductive organ.

The image was inexplicably magnified and showed a bizarre collection of unidentifiable organs. Beyond the anatomical inaccuracies, its labels included terms such as “testtomcels,” “diƨlocttal stem ells,” and “iollotte sserotgomar cell,” none of which exist in rodent reproductive biology.

Was Everyone Asleep?

Even to someone unfamiliar with rat anatomy, the absurdity of these labels should have been a red flag. That such errors made it past an editor and two peer reviewers raises concerns about oversight in academic publishing. In all, six people, including the editor and both reviewers, approved the article without noticing the glaring flaws in the AI rat illustrations.

Asked about the ridiculous AI rat image, one reviewer said their responsibility was limited to assessing the scientific content of the paper, not verifying the accuracy of its diagrams. Frontiers in Cell and Developmental Biology later released a statement acknowledging the concerns and announcing that the article would be investigated.

This Does Not Bode Well

The paper has since been retracted, and the names of the editor, the reviewers, and one of the authors have been removed pending the conclusion of the investigation. That an inaccurate AI-generated rat image made its way into a peer-reviewed paper highlights growing concern about the use of generative AI in academia.

Scientific Integrity

Scientific integrity consultant Elisabeth Bik highlighted this alarming trend on her blog, writing that the incident exemplifies the naivety or complicity of scientific journals, editors, and peer reviewers in accepting and publishing AI-generated content. The AI rat figures not only compromise scientific accuracy but also raise the worry that more realistic-looking fake illustrations could slip into the scientific literature undetected.

Bik emphasizes the potential harm that generative AI poses to the quality, trustworthiness, and overall value of scientific papers. The ease with which the botched AI rat illustrations passed peer review underscores the need for heightened scrutiny and awareness in academic publishing. Acknowledging the risks, some academic institutions are adapting their standards to the new AI reality.

Some Are Rejecting AI Outright

Nature, a prominent scientific journal, banned the use of generative AI for images and figures in its articles in 2023. The decision was motivated by concerns about the integrity of data and images, since generative AI tools generally do not provide access to their sources for verification.

The AI rat diagram is not the first case of generative AI causing embarrassment in a professional setting. In June 2023, two lawyers were fined for citing non-existent cases, generated by OpenAI’s ChatGPT, in a legal filing.

Source: Mashable