New Tech Knows If You’re Liberal Or Conservative Just By Looking At Your Face

A new type of facial recognition software has been unveiled that can tell if a person is liberal or conservative simply by looking at their face.

Scientists at Stanford University have published a facial recognition study. They found that a simple algorithm can tell whether a person is liberal or conservative based only on how the program processes a close-up photo of that person's face. The study, conducted by Michal Kosinski, was published this week in Scientific Reports, a Nature portfolio journal.

We live in the future, and sometimes it is unsettling. We were promised flying cars, and instead we got a lot of questions about whether computers are working for us or against us. In this case, the questions involve artificial intelligence, facial recognition, and privacy rights. How do we feel, as a society, about software that can look at our faces and tell others whether we are liberal or conservative? The very suggestion that your facial features could reveal your voting patterns and deeply held beliefs is insulting. Yet the data suggest the link is real.

Do liberals and conservatives smile differently? Is it in our eye color? The way we style our hair? This is the disturbing part: the technology is not especially advanced. Kosinski and his team collected about a million photos from social networks and dating sites, such as Facebook, posted by people in the United States, Canada, and the United Kingdom. They chose sites where people disclose their political affiliation when they sign up. The photos were fed into a machine learning system built on open-source facial recognition software. The program first cropped each photo to the face alone, so that nothing in the background could influence the result.
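
The article doesn't say how the cropping was done. As a minimal sketch, assume a face detector has already reported a bounding box for the face. The `FaceBox` type and `crop_to_face` helper below are hypothetical names, and the "photo" is a toy grid of grayscale values rather than a real image file.

```python
from typing import List, NamedTuple

Image = List[List[int]]  # rows of grayscale pixel values (0-255), for simplicity


class FaceBox(NamedTuple):
    """Hypothetical face bounding box, as a face detector might report it."""
    top: int
    left: int
    height: int
    width: int


def crop_to_face(image: Image, box: FaceBox) -> Image:
    """Keep only the pixels inside the face box, discarding the background.

    The box is clamped to the image edges, so a detector box that
    overshoots the frame yields a smaller crop instead of an error.
    """
    rows = len(image)
    cols = len(image[0]) if rows else 0
    top = max(0, box.top)
    left = max(0, box.left)
    bottom = min(rows, box.top + box.height)
    right = min(cols, box.left + box.width)
    return [row[left:right] for row in image[top:bottom]]


# A 5x6 toy "photo" whose pixel values encode their own position.
photo = [[10 * r + c for c in range(6)] for r in range(5)]
face = crop_to_face(photo, FaceBox(top=1, left=2, height=3, width=2))
print(face)  # [[12, 13], [22, 23], [32, 33]]
```

Everything outside the box is simply dropped, which is the point of the step described above: the model downstream only ever sees the face.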

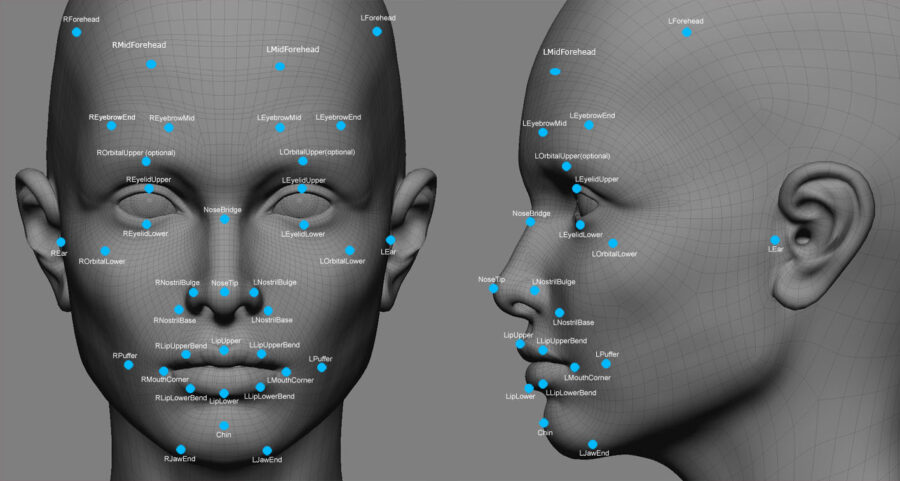

The facial recognition software scores each face on 2,048 features, far more than the naked eye takes in when looking at another person's face. The computer isn't thinking the way a human brain would; it represents each face as a list of numbers and looks for statistical patterns among them. Armed with those patterns, the software was then able to look at new photos and predict the political affiliation of the people in them.

This isn't the first time Stanford University's Michal Kosinski has alerted us to what facial recognition software can do. In 2017, he released work showing that a person's sexual orientation can be predicted in the same way. The researchers had believed the software would not be capable of this, and they debated whether to release the findings at all. Ultimately, they decided the public needed to know for everyone's safety. Kosinski released the following statement about the findings:

“We were really disturbed by these results and spent much time considering whether they should be made public at all. We did not want to enable the very risks that we are warning against. The ability to control when and to whom to reveal one’s sexual orientation is crucial not only for one’s well-being, but also for one’s safety.”

“We felt that there is an urgent need to make policymakers and LGBTQ communities aware of the risks that they are facing. We did not create a privacy-invading tool, but rather showed that basic and widely used methods pose serious privacy threats.”

It's hard to predict everything this facial recognition software could be used for, but the implications could be massive. Is this the kind of information we want known about us? Does privacy matter in the computer age? Who is overseeing facial recognition technology? Technology is advancing much faster than the laws that regulate it. Elon Musk has repeatedly warned about artificial intelligence over the past several years. Musk has said, "Mark my words, A.I. is far more dangerous than nukes. So why do we have no regulatory oversight?"

While little regulation yet governs this technology or protects the privacy of everyday people, the money behind the facial recognition market is huge. Allied Market Research estimates the market will grow to $9.2 billion by 2022.

So what does Michal Kosinski at Stanford think about facial recognition? He thinks we should be warned. On the release of his latest study, he said, "Don't shoot the messenger. In my work, I am warning against widely used facial recognition algorithms. Worryingly, those AI physiognomists are now being used to judge people's intimate traits – scholars, policymakers, and citizens should take notice."