YouTube Refuses To Ban Live-Stream Video Of Boulder Shooting

YouTube is denying requests to pull a controversial streamed video of the Boulder shooting.

This article is more than 2 years old

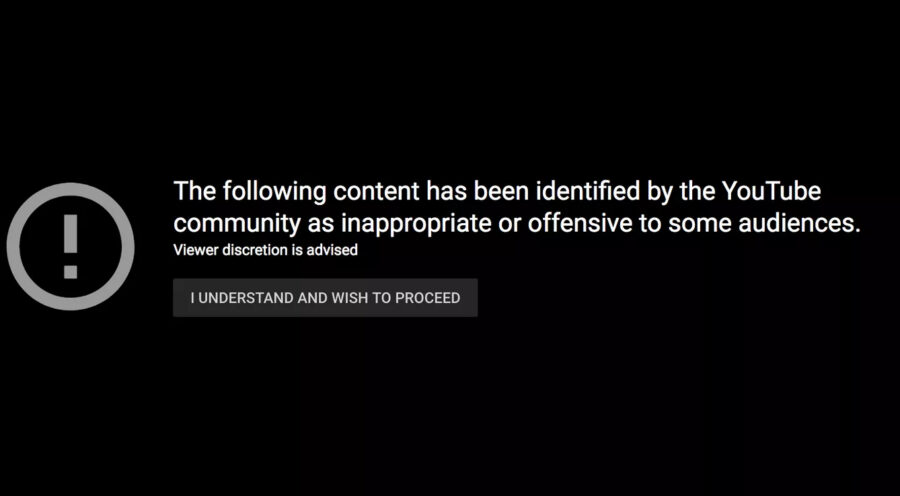

Traditionally, YouTube and other social media platforms have removed content that shows graphic violence. Most would likely assume that a three-hour-long livestream of a mass shooting, which shows the bodies of victims, would be removed under those policies. Shocking many, the social media company has decided to apply an age restriction to the video in question instead.

What in the world are they thinking? Well, The Verge asked them, and YouTube spokesperson Elena Hernandez responded. “Following yesterday’s tragic shooting, bystander video of the incident was detected by our teams. While violent content intended to shock or disgust viewers is not allowed on YouTube, we do allow videos with enough news or documentary context.”

There are a lot of angles to this controversial decision. One of them is definitely what is considered to be “documentary context” or journalism. Another aspect is what social media companies are responsible for.

The video in question was a livestream. It was aired by Dean Schiller, who calls himself a citizen journalist. Schiller runs the YouTube channel ZFG Videography. He follows local events, mostly involving the Boulder police. He has had previous run-ins with them, during which he was arrested, and he sued them as a result. When he heard the gunshots at the King Soopers supermarket, he ran toward them and turned on his camera. During the three-hour livestream, he argues with police who tell him to leave the area, records dead bodies, speculates on the motives of the shooter, and gives information on tactics the police are using while they are actively on the scene.

According to Vice, 30,000 people watched the shooting in Boulder, Colorado, live. Ten people were killed during the incident. YouTube is requiring users to verify their age before viewing the video, which means they have to log in. The company has said it is keeping an eye on the situation with the content, but it hasn’t suggested taking any further measures to moderate it.

Many commenters on YouTube have criticized Schiller for not calling 911 or helping people on-site as they are trying to get away from an active shooter. Others have criticized him for the way he talks during the video, speculating on moves and filming the bodies.

Because the YouTuber is a citizen journalist rather than a professional one, he isn’t necessarily holding himself to the standards of ethical journalism. In recent years, professional journalists have had more conversations about what it means to ethically cover these tragic events. There are websites with training on how to report on these events. Some of the basic rules include not speculating on motives, discussing mental health carefully, and not showing the bodies of victims, as it can worsen the trauma for their families. These resources say that research has shown the way news outlets cover mass shootings can contribute to copycat events.

However, Schiller isn’t a professional journalist and YouTube is a social media company, not a news outlet. So they aren’t responsible for this. Or are they? They claim they are keeping the video on their platform due to “documentary context”, despite the graphic violence.

Social media companies have been forced to look at a number of ethical questions in recent years. Are they responsible for fake news spreading throughout their websites? How should they handle misinformation about a deadly virus? These are important moral questions for a type of company that is still new to the world. After all, they aren’t content creators. They’re platforms for content creators to use. They’re tech companies, not writers, videographers, or journalists. So what is their ethical responsibility as it pertains to the content on their platforms?

As companies like YouTube apply their policies over time, the enforcement does not appear to be consistent, and that may be the part that’s most frustrating to many. To be fair, this type of company is new. YouTube is figuring out for itself what it considers to be allowed within its community guidelines. It was quick to remove content about election fraud in 2020. It is removing COVID misinformation. These actions suggest the company is becoming more aggressive about content removal, but its enforcement on graphic violence has been somewhat random. That could be brushed off, but in this case, it’s harder to do so. For the families of the victims of mass shootings, there is something particularly offensive about the removal of YouTube Ads that include drug use and bad words while livestreams of mass shootings are allowed.