Soldiers’ Emotional Attachments To Robots May Affect Their Decisions

Robots have already begun to change human life in myriad ways, both measurable and immeasurable. People have domestic, social, romantic, and sexual relationships with robots, and robots will only become more deeply woven into the fabric of everyday life. That inevitably means humans will become more familiar with their robotic counterparts, and even though robots aren't sentient and can't feel emotion, people often develop attachments to them. We get attached to computers, cars, and other possessions, so it seems only natural that we'd get cozy with machines that serve us, help us, or otherwise make our lives easier.

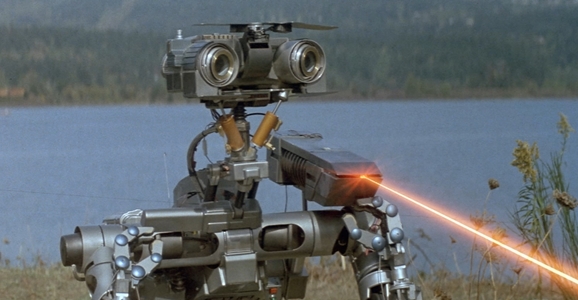

Developing attachments to robots isn't necessarily problematic, so long as we can hold it together when our Roombas stop working. But emotional attachments to robots that serve alongside humans in dangerous situations might actually affect how those situations play out. The use of robots for police and military work, including search and rescue, is at the forefront of robotic development. And while some people object to the widespread use of robots (especially drones and autonomous machines) in battle, others believe it's the best way to reduce human casualties in war. But that advantage could be undermined if a sense of loyalty, protectiveness, or camaraderie changes the way people act toward their robot helpers.

Julie Carpenter, a recent doctoral graduate in education from the University of Washington, did extensive research on what happens when people care too much about robots designed to serve in dangerous scenarios. Carpenter interviewed soldiers who work with bomb-defusing robots to gauge the impact of their "relationships" with machines that are routinely put in harm's way. The soldiers reported that when the robots were damaged or destroyed, they felt frustrated, angry, and sometimes sad. They also said that, based on how a robot moved, they could tell who was operating it, which linked the machine directly to a human comrade.

Some soldiers said they regarded the robots as extensions of themselves and felt responsible when they couldn't master certain maneuvers or weren't deft enough at the controls. The soldiers said these feelings don't affect their decisions or performance, but Carpenter worries that this might change as robotic technology evolves. She hopes the military will at least consider the possibility and keep it in mind as it designs new robots in the future. That might mean avoiding humanoid or animal-like designs.

Carpenter's not the only person interested in the ramifications of humans' attachments to robots. Robot-rights activists use evidence of these attachments to argue that robots need certain rights or protections, much like animals, to shield them from abuse. Even if robots can't feel or understand pain or abuse now, some activists believe that someday they will, and that what we do now will set the stage for that future. Others believe that cruelty toward robots, much like cruelty toward animals, reflects the state of humanity and might even influence the way humans treat each other. It's fair to say that human-robot attachments are a double-edged sword, and an aspect of human life that will become more and more prevalent. Despite the drawbacks Carpenter points out, our connection to our robots may work to our advantage if the Kaiju come back.