New AI Predicts Most Crimes Before They Happen

A new AI crime-predicting program is reported to be highly accurate, but it could be criticized for being biased.

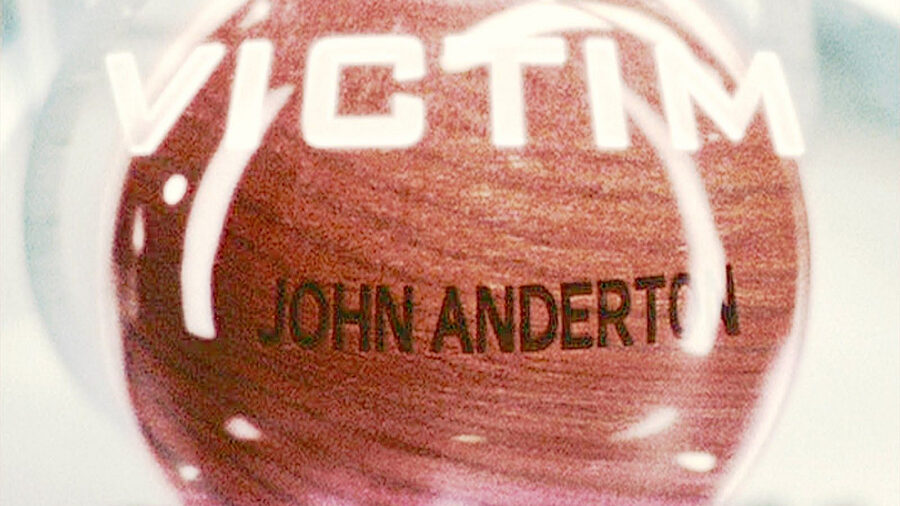

AI is evolving at an alarming rate. Robotics and tech companies have built humanoid robots that can mimic human faces and emotions, programs have beaten world-class chess champions, and AI-generated images have become hugely popular. Now, however, things may have gone a bit too far: a new AI program is reported to be able to predict crime. If that sounds familiar, you may be thinking of the Tom Cruise film Minority Report, whose science-fiction premise, predicting crime before it happens, is now being put to real-world use. We foresee something nefarious happening in the future.

The new AI crime model is trained on a city's crime logs covering a given time frame and learns patterns from them. One of the cities where the program is being tested is Chicago, which publishes crime logs detailing where and when each incident happened. The city is then divided into tiles of roughly 90 square meters (about 1,000 square feet), which helps the program estimate when and where another crime of the same type might occur. The predictions have been surprisingly accurate. However, the data could be biased from the start, since Chicago is known to have considerably more crime than most other cities.
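For readers curious about the mechanics, the tiling step can be sketched in a few lines of Python. This is a minimal illustration, not Chattopadhyay's model: it assumes each logged incident has already been converted to planar x/y coordinates in meters plus a day index, and the `Event` type, the `bin_events` function, and the one-week bucket size are hypothetical choices made here. It simply counts how many incidents fall in each tile during each week, the kind of time-and-place record a forecasting model could learn from.

```python
import math
from collections import Counter
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    """One logged incident (hypothetical schema): position in meters, day index."""
    x_m: float
    y_m: float
    day: int


def bin_events(events: Iterable[Event], tile_side_m: float) -> Counter:
    """Count incidents per (tile column, tile row, week) bucket.

    Floor division maps each coordinate to the square tile containing it,
    and each day index to the week containing it.
    """
    counts: Counter = Counter()
    for e in events:
        col = int(e.x_m // tile_side_m)
        row = int(e.y_m // tile_side_m)
        week = e.day // 7
        counts[(col, row, week)] += 1
    return counts


if __name__ == "__main__":
    # A square tile of about 90 square meters has a side of roughly 9.5 m.
    side = math.sqrt(90.0)
    # Hypothetical incidents, invented for illustration.
    log = [Event(5.0, 5.0, 0), Event(8.0, 2.0, 3), Event(25.0, 5.0, 9)]
    print(bin_events(log, tile_side_m=side))
```

Each key identifies one tile in one week, so the first two incidents share a bucket while the third lands in a different tile and a later week.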

Professor Ishanu Chattopadhyay and his colleagues have developed an AI crime-predicting system that has been tested in eight major cities. Most striking, the program has been able to predict crime with up to 90% accuracy. Despite that accuracy, many people have natural reservations about using a program of this kind. For instance, could it be used to pin a crime on the wrong person and see them wrongly thrown in jail? Chattopadhyay addressed that concern: “We have tried to reduce bias as much as possible. That’s how our model is different from other models that have come before.”

One earlier AI crime model attempted to pinpoint the types of people who would commit crimes, which drew plenty of criticism that the program was deeply flawed and racist. We can see why. Chattopadhyay’s program instead tries to reduce bias by focusing on time-stamped events and interpreting them more accurately. Still, it sounds a bit too much like Minority Report. And if a neighborhood experiences gun violence every day for a week, it would be fairly safe to predict that the following week will see the same.
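The point about a week of daily gun violence can be illustrated with a deliberately naive forecasting rule: predict that a tile will see an incident next week if it saw one this week. The Python sketch below is not how Chattopadhyay's model works; the function names and the weekly counts are hypothetical, invented purely to show how such a rule behaves in a busy area versus a quiet one.

```python
"""Toy persistence baseline: 'next week looks like this week' (illustrative only)."""


def persistence_forecast(weekly_counts: list[int]) -> list[bool]:
    """For each week t except the last, predict an incident in week t+1
    exactly when week t had at least one incident."""
    return [count > 0 for count in weekly_counts[:-1]]


def hit_rate(weekly_counts: list[int]) -> float:
    """Fraction of weeks 1..n-1 where the persistence forecast matched reality."""
    if len(weekly_counts) < 2:
        raise ValueError("need at least two weeks of counts")
    predicted = persistence_forecast(weekly_counts)
    actual = [count > 0 for count in weekly_counts[1:]]
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


if __name__ == "__main__":
    # Hypothetical weekly incident counts for two tiles, invented for illustration.
    busy_tile = [3, 2, 4, 1, 5, 2, 3, 1, 2, 4]   # something happens every week
    quiet_tile = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0]  # occasional, scattered incidents
    print(f"busy tile:  {hit_rate(busy_tile):.0%}")
    print(f"quiet tile: {hit_rate(quiet_tile):.0%}")
```

On the busy series this trivial rule is right every week, while on the quiet one it is right in only 5 of 9 weeks. A headline accuracy figure says little on its own unless it is compared against a simple baseline like this and against how much crime the area already has.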

AI crime prediction might not be the safest course of action when attempting to rid an area of crime. That the program can accurately predict 90% of crime is impressive, but it sounds less impressive when it is used in an area that already has more crime to begin with. If the program could predict a crime in a town of 300 people, that would be much more impressive. For anyone thinking about committing a crime, the police might not be the only thing to worry about from now on. A crime-predicting robot could be the next thing chasing you down.