Virtual Reality Helps Scientists Read Robots’ Minds, Here’s How


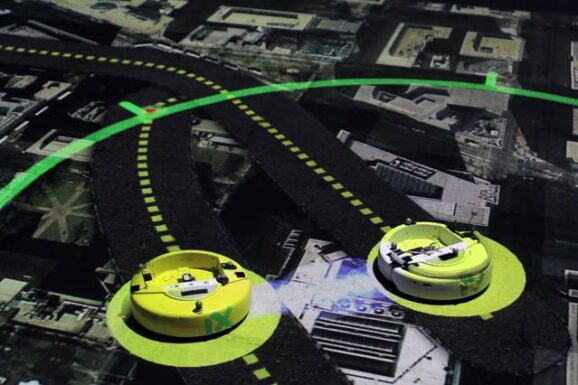

The scientists used a simpler task than the one that stymied the robot before. This time, instead of saving a human, the robot only had to reach the other side of the room without crashing into the “pedestrian.” Thus, what the robot has to “think” about is the best route: the one that minimizes encounters with the pedestrian while getting it across the room as quickly as possible. Thanks to a new visualization system, called “measurable virtual reality” (MVR) by its creators, scientists can see the robots’ “thoughts,” or at least their decision process.

The new system uses projectors on the ceiling, motion-capture technology, and animation software. As the robot tries to figure out the best route, the MVR system projects onto the ground a large pink dot that follows the pedestrian, indicating the robot’s understanding of the pedestrian’s position. Different lines are projected on the floor, showing the possible routes the robot might choose, and the best route is shown in green. The lines and dot move around as both the robot and the pedestrian shift.
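To make the idea of weighing routes concrete, here is a minimal sketch of how a planner might score candidate routes and pick one to highlight. It is not the MIT team's algorithm: the cost function, the `safe_radius` and `penalty` values, the waypoint coordinates, and every function name are assumptions chosen purely for illustration.

```python
import math

def path_length(route):
    """Total length of a route given as a list of (x, y) waypoints."""
    return sum(math.dist(a, b) for a, b in zip(route, route[1:]))

def min_clearance(route, pedestrian):
    """Smallest distance from any waypoint to the pedestrian.

    Simplification: only waypoints are checked, not the segments between them.
    """
    return min(math.dist(point, pedestrian) for point in route)

def route_cost(route, pedestrian, safe_radius=1.0, penalty=10.0):
    """Hypothetical cost: route length plus a penalty for passing too close.

    safe_radius and penalty are made-up tuning values, not from the article.
    """
    intrusion = max(0.0, safe_radius - min_clearance(route, pedestrian))
    return path_length(route) + penalty * intrusion

def pick_best_route(routes, pedestrian):
    """Index of the lowest-cost route for the current pedestrian position."""
    return min(range(len(routes)), key=lambda i: route_cost(routes[i], pedestrian))

# Three illustrative candidate routes across a 4-unit-wide room.
candidates = [
    [(0, 0), (2, 0), (4, 0)],     # straight across
    [(0, 0), (2, 1.5), (4, 0)],   # modest detour
    [(0, 0), (2, -3), (4, 0)],    # wide detour
]

pedestrian = (2.0, 0.2)  # assumed pedestrian position, near the straight route
best = pick_best_route(candidates, pedestrian)

# As in the article, the chosen route is the one drawn in green.
labels = ["green" if i == best else "other" for i in range(len(candidates))]
```

Re-running `pick_best_route` each time the pedestrian's position updates would change which route is labeled green, which mirrors how the projected lines shift as the robot and pedestrian move.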

The system is reminiscent of the probabilities that pop up on the screen as Watson weighs the best possible answers to a Jeopardy! question. That display gives viewers some idea of how Watson’s search mechanism works, and MVR does the same for the robot, except that instead of answering a trivia question, the robot makes calculations based on its perceptions and its programming. Most of the time, scientists can’t tell why robots do what they do, but according to MIT Aerospace Controls Lab postdoc Ali-akbar Agha-mohammadi, “if you can see the robot’s plan projected on the ground, you can connect what it perceives with what it does to make sense of its actions.” This allows robot designers to “find bugs in our code much faster.”

Seeing what the robot is thinking in real time can help avoid crashes, because the code can be terminated before any disaster occurs. It may also help designers identify and fix problems more quickly, which would aid in the development of driverless cars and other autonomous robots, such as drones that fight fires.