Deep Learning Algorithm Can Find A McDonald’s Much Faster Than You

Okay, I’m seriously frustrated. I’ve been trying to find this McDonald’s for a while now. I know it’s nearby, but I just can’t seem to figure out where exactly it is, and it’s driving me crazy. This is a brand new experience for me—I’ve never in my life sought out a McDonald’s. I’ve also never tried to put myself in the place of a computer, or more accurately, a deep learning algorithm, to try and navigate. And I can say, it’s damn hard. Of course machines are better than humans if we go about it like this.

Okay, I’m seriously frustrated. I’ve been trying to find this McDonald’s for a while now. I know it’s nearby, but I just can’t seem to figure out where exactly it is, and it’s driving me crazy. This is a brand new experience for me—I’ve never in my life sought out a McDonald’s. I’ve also never tried to put myself in the place of a computer, or more accurately, a deep learning algorithm, to try and navigate. And I can say, it’s damn hard. Of course machines are better than humans if we go about it like this.

Let me back up—exposition is important here. MIT’s Computer Science and Artificial Intelligence Laboratory, also known as CSAIL, wanted to see whether computers could make the kinds of decisions humans make based on their surroundings. For example, when we’re walking in a new city we might assess the safety level of a particular neighborhood, or when we pull off the highway in need of gas we might decide we’re more likely to find a station if we turn left rather than right at a stoplight. We might not consciously think all that hard about these decisions because we’ve made them countless times before, but our brains are actually factoring in a bunch of information, such as the condition of the buildings and houses in the neighborhood, the number of people on the streets, or the direction street noise is coming from. We then proceed accordingly.

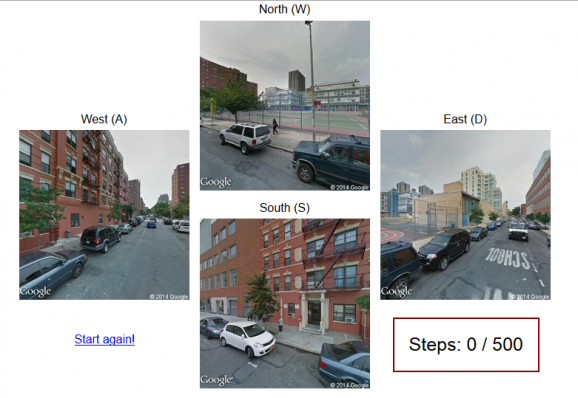

CSAIL’s question is whether or not computers can do the same thing, which involves them “seeing” their surroundings. And if this is possible, can computers make those decisions better than we can? The answer to the first question is yes, computers can do the same thing. The second question is more difficult to answer, hence CSAIL’s online demo, which asks users to find a McDonald’s. I thought it would be easy, but I was wrong. The demo gives the following instructions:

Imagine you are dropped at an arbitrary location in a city.

Without using the GPS or your phone, you need to find McDonald’s using only the visual cues around you.

By clicking the images on the left (‘W’:North, ‘A’:West, ‘S’:South, and ‘D’:East), navigate around the city until you find McDonald’s. A message will show up to let you know you have found it. Good luck, and safe travels! Note: You do not need to follow the street!

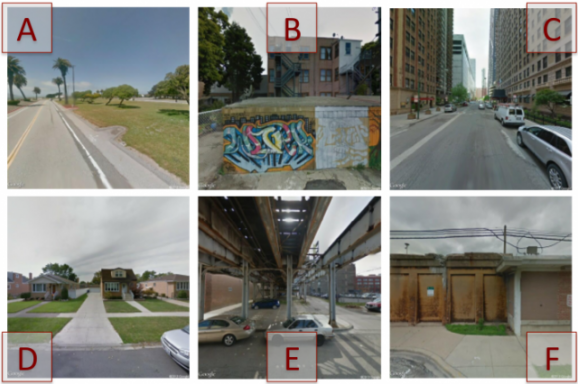

Sounds easy enough, right? Except it’s not. I virtually wandered the streets looking for the otherwise ubiquitous McDonald’s and never found one, perhaps in part because I find the interface to be, for lack of a better term, wonky. I don’t process visual information like a computer does, but apparently some people do. CSAIL says humans actually performed better on the “find the McDonald’s” task, but that computers did better on a variation of the task that involved assessing which of two photos was closer to a McDonald’s.

Of course, the computer has access to all kinds of information we don’t. In developing the algorithm, researchers gave the machines access to 8 million Google images from major American cities, as well as embedded GPS data on the locations of McDonald’s restaurants and the corresponding crime rates.

That part isn’t all that different from the way Watson pulls up information, but the impressive part is that the deep learning algorithm essentially teaches itself how to correlate the photos with the data. The program learned—by itself—that taxis, police vehicles, and prisons are frequently near McDonald’s restaurants (yay?), but that bridges, cliffs, and sandbars aren’t. Okay, so maybe that last part doesn’t seem particularly brilliant, but on a bigger level, we’re talking about computers making inferences and connections which lead to identifying patterns and the ability to make accurate predictions.
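
To make the idea concrete, here is a toy sketch in Python of how a program could use cues like these to play the demo. It is not CSAIL’s model: the cue names and weights are made up for illustration, and a real deep learning system learns its own visual features directly from pixels rather than from a hand-written list. The functions `score_view`, `closer_of_two`, and `choose_direction` are hypothetical names, covering the two tasks described above: picking which of two photos is closer, and choosing a direction to walk.

```python
# Hypothetical cue weights, invented for illustration: positive means
# "a McDonald's is probably closer", negative means "probably farther".
# A real model learns this kind of association from data.
CUE_WEIGHTS = {
    "taxi": 1.0,
    "police_vehicle": 0.8,
    "prison": 0.5,
    "bridge": -0.7,
    "cliff": -1.0,
    "sandbar": -1.0,
}


def score_view(cues: list[str]) -> float:
    """Sum the weights of the cues spotted in one street view; unknown cues count as 0."""
    return sum(CUE_WEIGHTS.get(cue, 0.0) for cue in cues)


def closer_of_two(view_a: list[str], view_b: list[str]) -> str:
    """Pairwise task: guess which of two views is closer to a McDonald's."""
    return "A" if score_view(view_a) >= score_view(view_b) else "B"


def choose_direction(views: dict[str, list[str]]) -> str:
    """Greedy navigation step: pick the key (W/A/S/D) whose view scores highest."""
    return max(views, key=lambda key: score_view(views[key]))


if __name__ == "__main__":
    views = {
        "W": ["bridge", "cliff"],          # North
        "A": ["taxi", "police_vehicle"],   # West
        "S": ["tree"],                     # South
        "D": ["taxi"],                     # East
    }
    print(choose_direction(views))
    print(closer_of_two(["taxi"], ["sandbar"]))
```

Run as-is, this heads West (the view with a taxi and a police car) and judges the taxi photo closer than the sandbar photo. A greedy “always walk toward the best-looking view” rule like this can still wander in circles, which may be part of why the full navigation task is harder than simply comparing two photos.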

That part isn’t all that different from the way Watson pulls up information, but the impressive part is that the deep learning algorithm essentially teaches itself how to correlate the photos with the data. The program learned—by itself—that taxis, police vehicles, and prisons are frequently near McDonald’s restaurants (yay?), but that bridges, cliffs, and sandbars aren’t. Okay, so maybe that last part doesn’t seem particularly brilliant, but on a bigger level, we’re talking about computers making inferences and connections which lead to identifying patterns and the ability to make accurate predictions.

And now that I know what to look for, I’m headed back to the online demo. I’m going to find this damn McDonald’s.