Asimov’s First Law In Action: This Robot Can’t Figure Out How To Do The Right Thing


Isaac Asimov’s first law of robotics is that a robot may not injure a human or, through inaction, allow a human to come to harm. The purpose behind the law is to avert robot apocalypse scenarios and generally assuage people’s fear of artificial life. The problem with the law, though, is implementation. Robots don’t speak English, so how would one code such a law, especially given how vague the notion of harm is? Does taking jobs from humans constitute harm? In Asimov’s short story “Liar!,” a mind-reading robot realizes that harm can also be emotional and lies to humans to avoid hurting their feelings, which of course only harms them more in the long run. All of this raises the bigger issue of whether robots can be programmed or taught to behave ethically, a subject of ongoing debate among roboticists. A recent experiment conducted by Alan Winfield of the UK’s Bristol Robotics Laboratory sheds some light on this question and raises a new one: do we really want our robots to try to be ethical?

Isaac Asimov’s first law of robotics is that a robot can’t harm a human or allow a human to come to harm. The purpose behind the law is to avert robot apocalypse scenarios and generally assuage people’s fear of artificial life. The problem with the law, though, is implementation. Robots don’t speak English—how would one code or program such a law, especially given how vague the notion of harm is? Does taking jobs from humans constitute harm? In Asimov’s short story “Liar,” a mind-reading robot realizes that harm can also be emotional, and lies to humans to avoid hurting their feelings, which of course only harms them more in the long run. All of this raises the bigger issue of whether robots can be programmed or taught to behave ethically, which is the subject of debate among roboticists. A recent experiment conducted by Alan Winfield of the UK’s Bristol Robotics Laboratory sheds some light on this question, and raises a new question: do we really want our robots to try and be ethical?

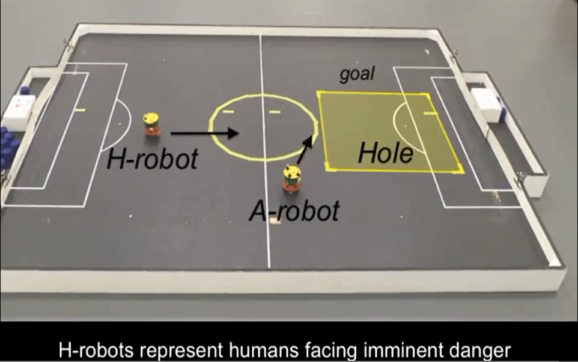

The experiment revolved around a task designed to exemplify Asimov’s first law, except that instead of interacting with humans, the robot subject interacted with robot stand-ins. The rule remained the same: the study robot, A, was programmed to move toward a goal at the opposite end of the table and, whenever possible, to “save” any of the human-substitute robots (h-robots) as they moved toward a hole.

Initially, the robot succeeded in bumping the h-robots out of the way. No problem. But then Winfield added a second h-robot, so the robot had to decide which one to save. And since the two h-robots looked the same, the robot couldn’t simply pick the more attractive one to rescue. It’s similar to the Asimov story “Runaround.” What’s a robot to do?

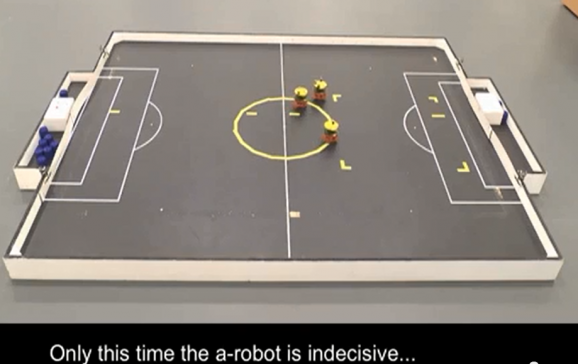

Well, it did pretty much what a human would do. Sometimes it picked one h-robot and saved it, and the other one “died” (there really is no clever solution here, whatever those of us who imagine we could somehow save both might think). But in 14 of 33 trials, the robot couldn’t decide which to save and dithered for so long that both h-robots died. Hang on here: isn’t the whole point of robots and machines that they aren’t plagued by indecision like we are? What’s the use of an ambivalent robot, especially when lives are on the line?

Well, it did pretty much what a human would do. Sometimes it picked one h-robot and saved it, and the other one “died” (there are really no tricky solutions available here, despite those of us who think we’d somehow be able to save them both). But 14 out of 33 times, the robot couldn’t decide which to save, and pondered the decision for so long that both h-robots died. Hang on here—isn’t the whole point of robots and machines that they aren’t plagued by indecision like we are? What’s the use of an ambivalent robot, especially when lives are on the line?

The robot wasn’t really thinking anything. It was trying to obey its programming but didn’t know how. That’s not quite the same as making an ethical decision, but it’s still intriguing. Winfield called the robot an “ethical zombie,” though I find it notable that over half of the time it did choose to rescue one of the h-robots. It made two different kinds of decisions, but why? Winfield is puzzled by the results as well, and says that while he used to think robots couldn’t make ethical choices, his answer now is, “I have no idea.”

This is part of why some people are understandably freaked out by the idea of robots making their own decisions on the battlefield. Still, some roboticists are trying to program robots to make ethical decisions in combat, such as whether or not to shoot. Um…no thanks. But roboticists will keep at it, at least until an ethical robot tells them to stop.